
Rerankers are critical components in production retrieval systems. They refine initial search results by re-scoring query-document pairs, dramatically improving precision without sacrificing recall. For a deep dive into how rerankers work and when to use them, check out our introduction to rerankers.

This guide focuses on a newer capability: instruction-following rerankers. These models accept natural language instructions that guide the ranking process, letting you encode domain expertise, business logic, and user preferences directly into your retrieval pipeline, without retraining models or building complex rule engines.

We'll cover what makes instruction-following rerankers different, their main use cases, failure modes to avoid, and how to write effective prompts for zerank-2.

What is an Instruction-Following Reranker?

Traditional rerankers score documents based purely on query-document similarity. They're excellent at surface-level and semantic matching, but they can't adapt to context-specific requirements.

Instruction-following rerankers change this. They accept standing instructions alongside your queries: think of these as persistent configuration that shapes how all queries get ranked for a particular use case or customer.

How This Differs from Traditional Reranking

With zerank-2, you can append specific instructions, lists of abbreviations, business context, or user-specific memories to influence how results are reranked.

The instructions provide persistent context about your domain, priorities, and constraints that inform every ranking decision.
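Conceptually, every rerank call pairs the user's query with the same standing instructions. The sketch below assumes the instructions are appended to the query text. `build_rerank_query`, the `Instructions:` label, and the blank-line delimiter are hypothetical, so check ZeroEntropy's API reference for the exact request shape zerank-2 expects. The instruction text comes from the legal tech use case below.

```python
# Hypothetical helper: the "Instructions:" label and the blank-line delimiter
# are illustrative assumptions, not a documented zerank-2 request format.
STANDING_INSTRUCTIONS = (
    "Prioritize statutes and regulations over case law. "
    "Documents from .gov sources rank higher than legal blogs or forums."
)


def build_rerank_query(query: str, instructions: str) -> str:
    """Append standing instructions to a user query before reranking."""
    instructions = instructions.strip()
    if not instructions:
        # No instructions configured: send the query unchanged.
        return query
    return f"{query}\n\nInstructions:\n{instructions}"


# The same standing instructions are reused for every query in this deployment.
for user_query in ["statute of limitations for breach of contract", "data privacy rules"]:
    print(build_rerank_query(user_query, STANDING_INSTRUCTIONS))
    print("---")
```

Only the query changes between calls; the instructions act as configuration for the deployment.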

Main Use Cases of Instruction-Following Rerankers

Domain-specific retrieval: Encode industry expertise into ranking. A legal tech application might use: "Prioritize statutes and regulations over case law. Documents from .gov sources rank higher than legal blogs or forums."

Multi-tenant systems: Different customers need different ranking behavior. A document that's highly relevant for one customer may be noise for another. Instructions let you customize without maintaining separate models.

Source and format prioritization: "Prioritize structured data and tables over prose. Official documentation ranks higher than Slack messages or email threads." This is especially valuable when your corpus mixes authoritative and informal sources.

Temporal and recency preferences: "Strongly prefer documents from 2024 onwards. Our architecture changed completely in January 2024, making older documentation obsolete." Unlike hard date filters, this allows flexibility while setting clear preferences.

Disambiguation through business context: Many terms are polysemous. "Apple" means something different to a grocery marketplace than to a quant fund. "Jaguar" could be an animal, a car brand, or a legacy software system. Instructions clarify which interpretation matters.

How to Prompt zerank-2

zerank-2 accepts instructions in flexible natural language. Use whatever formatting keeps your prompts maintainable: XML tags, markdown, and plain text all work. The model is trained to understand various formatting conventions.

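A basic structure separates the instructions into labeled sections, for example one XML tag or markdown heading per concern (context, priorities, terminology).

A simpler option is a few plain sentences covering the same points.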
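Both styles mentioned above, tagged sections and plain sentences, can be generated from the same content. A minimal sketch; the function names and the tag names `context` and `priorities` are arbitrary choices for illustration, not anything zerank-2 requires.

```python
def render_tagged(sections: dict[str, str]) -> str:
    """Wrap each section in an XML-style tag named after its key."""
    return "\n".join(f"<{name}>\n{text.strip()}\n</{name}>" for name, text in sections.items())


def render_plain(sections: dict[str, str]) -> str:
    """Render the same content as a run of plain sentences."""
    return " ".join(text.strip() for text in sections.values())


# Illustrative content; dicts keep insertion order, so sections render in this order.
sections = {
    "context": "We search an internal engineering knowledge base.",
    "priorities": "Official documentation ranks higher than Slack messages or email threads.",
}

print(render_tagged(sections))
print()
print(render_plain(sections))
```

Since the model reads either form, the choice is mostly about maintainability: tagged sections are easier to diff and to fill per customer, while plain sentences are quicker to write by hand.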

Two Types of Instructions

Meta Instructions: How to Assess Results

Meta instructions define evaluation criteria: what features matter, what to prioritize, and how to weight different aspects.

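Format and source prioritization: which document formats and sources should rank higher.

Temporal preferences: how strongly to favor recent documents, and why.

Emphasis on query components: which parts of a query should carry the most weight.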
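The three kinds of meta instruction above are often easiest to maintain as structured settings that get rendered into sentences. A minimal sketch; `meta_instructions` and its phrasing are illustrative, not wording zerank-2 requires.

```python
from typing import Optional


def meta_instructions(
    source_order: list[str],
    prefer_after: Optional[str] = None,
    emphasize: Optional[list[str]] = None,
) -> str:
    """Turn evaluation criteria into instruction sentences (wording is illustrative)."""
    parts: list[str] = []
    if source_order:
        # Earlier entries are described as more authoritative.
        parts.append("Rank sources in this order of authority: " + " > ".join(source_order) + ".")
    if prefer_after:
        parts.append(f"Strongly prefer documents from {prefer_after} onwards.")
    if emphasize:
        parts.append("Weight these parts of the query most heavily: " + ", ".join(emphasize) + ".")
    return " ".join(parts)


print(meta_instructions(
    ["official documentation", "design docs", "Slack messages"],
    prefer_after="2024",
    emphasize=["error codes", "product version"],
))
```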

Business Instructions: Domain Context

Business instructions provide context: what you're trying to achieve, industry knowledge, company-specific terminology, user preferences, and domain expertise.

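Industry context: the field you operate in and what users in it are typically looking for.

Terminology disambiguation: what ambiguous terms, acronyms, and abbreviations mean in your domain.

Company-specific context: internal product names, systems, and conventions the reranker cannot know on its own.

User preferences and constraints: what a particular user cares about, and what they need to avoid.

Contextual relevance: what makes a document useful for the task at hand, beyond being on-topic.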
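Since zerank-2 accepts lists of abbreviations and business context, a glossary dictionary is a convenient way to keep terminology in one place. A minimal sketch; `business_instructions` and its field labels are hypothetical, and the "Apple" entry reuses the disambiguation example from earlier.

```python
def business_instructions(
    industry: str,
    glossary: dict[str, str],
    company_context: str = "",
    user_preferences: str = "",
) -> str:
    """Assemble domain context, including a glossary of ambiguous terms and abbreviations."""
    lines = [f"Industry: {industry}"]
    if glossary:
        lines.append("Terminology:")
        # One "term: meaning" line per entry, sorted so the output is stable.
        lines.extend(f"- {term}: {meaning}" for term, meaning in sorted(glossary.items()))
    if company_context:
        lines.append(f"Company context: {company_context}")
    if user_preferences:
        lines.append(f"User preferences: {user_preferences}")
    return "\n".join(lines)


print(business_instructions(
    industry="quantitative hedge fund",
    glossary={"Apple": "Apple Inc. (AAPL), the company, not the fruit"},
    user_preferences="Prefers primary filings over news coverage.",
))
```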

Combining Both Types

The most effective instructions combine meta and business guidance:
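One way to combine the two types is to put domain context first, ranking criteria second, and an explicit rule for conflicting criteria last. The ordering and section labels are a design choice for this sketch, not a documented requirement; `combine_instructions` is hypothetical. The example text reuses the recency and technical-depth trade-off discussed under failure modes.

```python
from typing import Optional


def combine_instructions(business: str, meta: str, tie_breaker: Optional[str] = None) -> str:
    """Join domain context and ranking criteria, plus an optional conflict rule."""
    blocks = [f"Context:\n{business.strip()}", f"How to rank:\n{meta.strip()}"]
    if tie_breaker:
        # Spelling out the trade-off addresses the conflicting-directives failure mode.
        blocks.append(f"When criteria conflict:\n{tie_breaker.strip()}")
    return "\n\n".join(blocks)


print(combine_instructions(
    business="Our architecture changed completely in January 2024.",
    meta="Prefer recent documents. Prefer comprehensive technical depth.",
    tie_breaker="Recency wins: rank a shorter 2024 document above a thorough older one.",
))
```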

Per-Customer Customization with Templates

In multi-tenant systems, you can create base instructions and customize them per customer using prompt templates:
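A minimal sketch using Python's standard `string.Template`. The placeholder names, the customer profiles, and `instructions_for` are hypothetical; in practice the profile values would come from wherever you store per-customer memory and preferences.

```python
from string import Template

# Shared base logic; $-placeholders are filled per customer (field names are illustrative).
BASE_TEMPLATE = Template(
    "You are ranking documents for $customer, a company in $industry.\n"
    "Official documentation ranks higher than Slack messages or email threads.\n"
    "Customer preferences: $preferences"
)

# Hypothetical customer profiles.
CUSTOMERS = {
    "acme": {
        "customer": "Acme Logistics",
        "industry": "freight shipping",
        "preferences": "Prefer documents that mention delivery windows.",
    },
    "beta": {
        "customer": "Beta Capital",
        "industry": "quantitative finance",
        "preferences": "'Apple' refers to Apple Inc. (AAPL), not the fruit.",
    },
}


def instructions_for(customer_id: str) -> str:
    # substitute() raises KeyError when a placeholder has no value, so an
    # incomplete profile fails loudly instead of sending a half-filled prompt.
    return BASE_TEMPLATE.substitute(CUSTOMERS[customer_id])


print(instructions_for("beta"))
```

`substitute` is used rather than `safe_substitute` so a missing field surfaces as an error; `safe_substitute` would leave the raw `$placeholder` in the prompt.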

This approach lets you maintain consistent base logic while adapting to individual customer needs, preferences, and knowledge.

Failure Modes

Overly generic instructions: "Rerank results based on relevance" doesn't provide any actionable guidance. Instructions need specificity.

Conflicting directives: "Prioritize recent documents" combined with "prioritize comprehensive technical depth" creates tension when the most thorough documentation is older. Be explicit about how to resolve these trade-offs.

Using instructions to fix poor retrieval: If relevant documents aren't in your initial recall set, reranking can't surface them. Fix retrieval first, then refine with reranking.
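Before tuning instructions, it helps to check whether the documents you expect are in the candidate set at all. A sketch, assuming you have hand-labeled relevant document IDs for a few queries; the function names and IDs are hypothetical.

```python
def recall_at_k(candidates: list[str], relevant: set[str], k: int) -> float:
    """Fraction of known-relevant doc IDs that appear in the first-stage top-k."""
    if not relevant:
        raise ValueError("need at least one relevant document ID")
    hits = relevant.intersection(candidates[:k])
    return len(hits) / len(relevant)


def unreachable(candidates: list[str], relevant: set[str], k: int) -> set[str]:
    """Relevant docs no reranker can surface, because retrieval never returned them."""
    return relevant - set(candidates[:k])


first_stage = ["doc-7", "doc-2", "doc-9", "doc-4"]  # top-k IDs from first-stage retrieval
labeled_relevant = {"doc-2", "doc-5"}                # hand-labeled for this query
print(recall_at_k(first_stage, labeled_relevant, k=4))  # 0.5
print(unreachable(first_stage, labeled_relevant, k=4))  # {'doc-5'}
```

If `unreachable` is non-empty for many queries, the fix belongs in retrieval, not in the instructions.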

Impact of Instructions: Concrete Examples

Example 1: Healthcare Document Retrieval

Query: "patient consent requirements"

Without instructions:

With instructions:

The instruction shifts focus from general medical ethics to the specific regulatory framework that software builders need.

Example 2: Technical Documentation Search

Query: "how to handle rate limiting"

Without instructions:

With instructions:

Example 3: Candidate Search with Domain Context

Query: "engineers with cloud experience"

Without instructions:

With instructions:

Same query, completely different interpretation based on what "cloud experience" means in your context.
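When running your own with/without comparisons like these, it helps to quantify how far each document moved. A sketch, assuming you already have two ranked lists of document IDs for the same query, one from a call without instructions and one with; the IDs below are placeholders, not zerank-2 output, and the function names are hypothetical.

```python
def rank_shifts(before: list[str], after: list[str]) -> dict[str, int]:
    """Positions gained per doc ID (positive = moved up once instructions were added)."""
    pos_before = {doc: i for i, doc in enumerate(before)}
    return {doc: pos_before[doc] - i for i, doc in enumerate(after) if doc in pos_before}


def biggest_movers(shifts: dict[str, int], n: int = 3) -> list[tuple[str, int]]:
    """Docs whose position changed the most, in either direction."""
    return sorted(shifts.items(), key=lambda kv: abs(kv[1]), reverse=True)[:n]


without_instr = ["d1", "d2", "d3", "d4"]
with_instr = ["d3", "d1", "d4", "d2"]
shifts = rank_shifts(without_instr, with_instr)
print(shifts)                       # {'d3': 2, 'd1': -1, 'd4': 1, 'd2': -2}
print(biggest_movers(shifts, n=2))  # [('d3', 2), ('d2', -2)]
```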

Example 4: Multi-Source Enterprise Search

Query: "Q3 revenue targets"

Without instructions:

With instructions:

Conclusion

Instruction-following rerankers are a paradigm shift in how we build retrieval systems. Instead of building separate pipelines for different domains or customers, you can use a single reranker with different standing instructions.

Key principles for AI engineers:

Combine meta and business instructions: Tell the reranker both how to evaluate documents and what domain context matters. Neither alone is as powerful as both together.

Use templates for multi-tenant systems: Create base instructions and potentially customize per customer by interpolating user-specific memory, preferences, and constraints.

Instructions amplify good retrieval: They refine results, but can't fix fundamentally poor recall. Ensure your first-stage retrieval (BM25 + vector search) is solid before optimizing reranking.

Iterate based on failure modes: Start with straightforward instructions and observe where the reranker struggles. Refine your prompts based on these failure cases.
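Iterating is easier with a small labeled set and a single number per instruction variant. A sketch, assuming you have a few queries with graded relevance labels and a function `rank_fn` that calls your reranker. `rank_fn` is hypothetical here; in the usage it is a toy stand-in, not zerank-2. NDCG@k is one common metric choice, not one this guide prescribes.

```python
import math
from typing import Callable

# rank_fn(query, instructions, docs) -> docs reordered by the reranker.
RankFn = Callable[[str, str, list[str]], list[str]]
Labeled = list[tuple[str, list[str], dict[str, float]]]  # (query, candidates, graded labels)


def ndcg_at_k(ranked: list[str], gains: dict[str, float], k: int) -> float:
    """Normalized discounted cumulative gain with a log2(position + 1) discount."""
    def dcg(scores: list[float]) -> float:
        return sum(g / math.log2(i + 2) for i, g in enumerate(scores[:k]))

    actual = dcg([gains.get(doc, 0.0) for doc in ranked])
    ideal = dcg(sorted(gains.values(), reverse=True))
    return actual / ideal if ideal > 0 else 0.0


def compare_variants(rank_fn: RankFn, variants: dict[str, str], labeled: Labeled, k: int = 10) -> dict[str, float]:
    """Mean NDCG@k for each named instruction variant over the labeled queries."""
    if not labeled:
        raise ValueError("need at least one labeled query")
    results = {}
    for name, instructions in variants.items():
        scores = [ndcg_at_k(rank_fn(q, instructions, docs), gains, k) for q, docs, gains in labeled]
        results[name] = sum(scores) / len(scores)
    return results


# Toy stand-in for a real reranker call, for illustration only.
def toy_rank(query: str, instructions: str, docs: list[str]) -> list[str]:
    return sorted(docs) if "prefer a" in instructions else sorted(docs, reverse=True)


labeled = [("q1", ["a", "b", "c"], {"a": 2.0, "b": 1.0})]
print(compare_variants(toy_rank, {"v1": "prefer a", "v2": "no preference"}, labeled, k=3))
```

Keeping the labeled set fixed while only the instructions change makes it clear whether a prompt edit actually helped.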

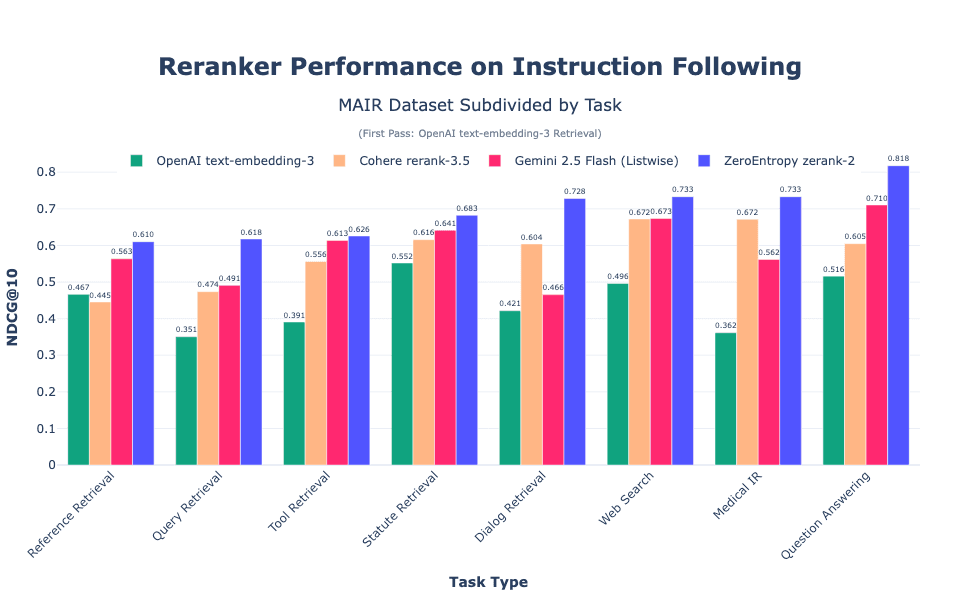

zerank-2's native instruction-following turns reranking from a static similarity computation into a context-aware, domain-informed ranking process. Whether you're building enterprise search, AI agents, or vertical SaaS applications, instruction-following rerankers should be a core component of your stack.
